Introduction:

Artificial Intelligence (AI) has become a transformative force in many areas of daily life. In healthcare, transportation, finance, and entertainment, AI technologies can raise productivity, streamline processes, and deliver significant societal benefits. As AI advances, however, questions about its ethical implications have become increasingly prominent. This article examines the field of AI ethics: its key concerns, its challenges, and potential solutions.

Defining AI Ethics:

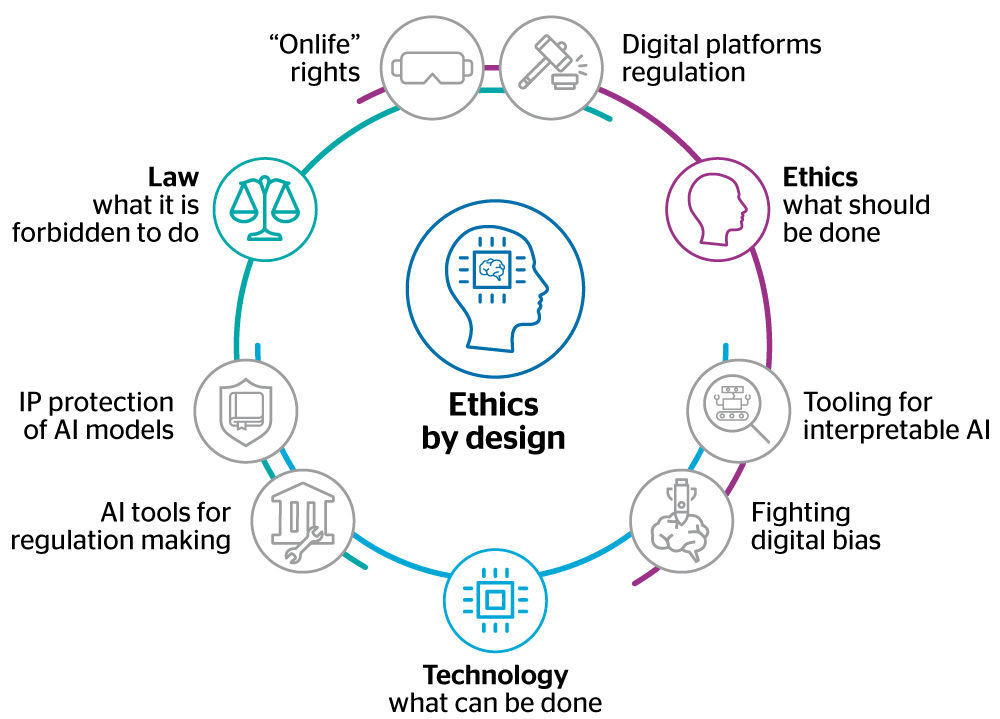

AI ethics refers to the moral principles and guidelines that govern the development, deployment, and use of AI systems. It aims to ensure that AI is designed and implemented in a manner that aligns with human values, promotes fairness, transparency, accountability, and privacy, and avoids harm to individuals or society as a whole. While AI offers immense potential, careful attention to the ethics of its deployment is critical to preventing unintended consequences and misuse.

Key Ethical Concerns:

Bias and Discrimination:

AI systems are trained on vast amounts of data, and if that data is biased, the resulting models can inadvertently reproduce and amplify existing biases, leading to discrimination. This bias can appear in domains such as facial recognition, hiring, and criminal justice, where it creates disparities between groups and reinforces social inequalities.

Privacy and Data Security:

AI relies on data, often personal and sensitive, to learn and make decisions. The collection, storage, and use of this data raise concerns about privacy and data security. It is crucial to establish robust safeguards to protect individuals’ information and ensure it is used responsibly.

Accountability and Transparency:

As AI systems become more autonomous and make decisions with minimal human intervention, questions arise about who is responsible for their actions. The lack of transparency in AI algorithms makes it challenging to understand and explain their decision-making processes, which can hinder accountability.

Job Displacement and Economic Impact:

The widespread adoption of AI technologies can automate tasks traditionally performed by humans, leading to job displacement. This raises concerns about unemployment and economic inequality, and it creates a need to reskill and upskill the workforce so that people can adapt to the changing job market.

Autonomous Weapons and Safety:

The development of autonomous weapons systems raises ethical concerns because AI-powered weapons that operate independently could cause unintended harm, slip out of human control, and escalate conflicts. Striking a balance between the use of AI in defense and meaningful human oversight is crucial.

Addressing Ethical Challenges:

Fairness and Bias Mitigation:

AI developers must actively identify and address biases in training data and algorithms. Transparent and diverse data sets, algorithmic audits, and ongoing evaluation can help mitigate bias and promote fairness in AI systems. Collaboration across interdisciplinary teams and engagement with diverse stakeholders are essential in this process.
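To make the idea of an algorithmic audit more concrete, here is a minimal sketch of one possible check: comparing how often a system selects people from different groups. The data, the group labels, and the function names are hypothetical and chosen only for illustration. The metric shown (the ratio of the lowest to the highest selection rate, often called the disparate impact ratio) is just one of several fairness measures and is not the only way to run an audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Hypothetical hiring decisions: (group label, was the candidate shortlisted?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A commonly cited rule of thumb treats a ratio below 0.8 as a signal worth investigating. A low ratio does not prove discrimination on its own, and a high one does not rule it out, so a check like this works best as one input to the ongoing evaluation described above.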

Privacy and Data Governance:

Stricter regulations and frameworks that govern the collection, use, and sharing of data are necessary to protect individuals’ privacy. Implementing privacy-preserving techniques, anonymization, and secure data storage methods can help strike a balance between data utility and privacy.
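As an illustration of the trade-off between data utility and privacy, the sketch below uses k-anonymity, one well-known anonymization idea in which every record must share its identifying attributes with at least k - 1 others. The records, field names, and helper functions are hypothetical, and k-anonymity is only one of many privacy-preserving techniques; it has known weaknesses and should not be read as a complete safeguard.

```python
from collections import Counter


def generalize_age(age, bucket=10):
    """Replace an exact age with a coarser range such as '20-29'."""
    low = (age // bucket) * bucket
    return f"{low}-{low + bucket - 1}"


def k_anonymity(rows, quasi_identifiers):
    """Size of the smallest group of rows sharing the same quasi-identifier values."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values(), default=0)


# Hypothetical records; 'age' and 'zip' could be combined to re-identify a person.
records = [
    {"age": 23, "zip": "10001", "diagnosis": "flu"},
    {"age": 27, "zip": "10002", "diagnosis": "asthma"},
    {"age": 34, "zip": "10001", "diagnosis": "flu"},
    {"age": 38, "zip": "10003", "diagnosis": "diabetes"},
]

# Coarsen the identifying fields: age becomes a range, the zip code is truncated.
generalized = [
    {
        "age": generalize_age(r["age"]),
        "zip": r["zip"][:3] + "**",
        "diagnosis": r["diagnosis"],
    }
    for r in records
]

k_raw = k_anonymity(records, ["age", "zip"])
k_generalized = k_anonymity(generalized, ["age", "zip"])
print(f"k before generalization: {k_raw}")
print(f"k after generalization:  {k_generalized}")
```

Coarsening the fields raises k, so each person is harder to single out, but the data also becomes less precise for analysis. That tension is the balance between utility and privacy that regulation and engineering practice both have to manage.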

Explainability and Accountability:

Encouraging the development of explainable AI models is crucial for understanding the decision-making processes of AI systems. Promoting standards and regulations that mandate transparency, disclosure, and auditing of AI algorithms can enhance accountability and mitigate risks associated with AI deployment.

Ethical Standards and Regulation:

Developing comprehensive ethical frameworks, guidelines, and international agreements is essential to ensure responsible AI development and deployment. Collaboration between governments, academia, industry, and civil society can help shape policies that foster innovation while upholding ethical principles.

Human-Centered AI Design:

Prioritizing human values and well-being in AI design is crucial. Integrating multidisciplinary perspectives, including ethics, social sciences, and philosophy, during the development process can help create AI systems that are aligned with human needs and values.

Conclusion:

As artificial intelligence continues to permeate various domains of society, addressing the ethical implications becomes paramount. Navigating the boundaries of AI innovation requires a collective effort to develop and implement ethical guidelines and regulations. Striking a balance between technological progress and societal well-being is imperative to harness the potential of AI for the betterment of humanity. By fostering interdisciplinary collaboration, transparency, accountability, and a commitment to human-centered design, we can shape a future where AI serves as a force for good, empowering individuals and advancing society as a whole.